The AI-Act in depth

In our previous blog, “What is the AI Act?”, we explored how the AI-Act defines AI, how systems are classified into three risk categories (unacceptable, high, and low risk), and what the consequences of each category are. This blog dives further into the AI-Act and explores its position in the context of global AI regulation, the ongoing discussions, and the key dates associated with it.

Global AI Legislation Efforts

The EU is not the only legislator attempting to regulate AI. Various countries are taking different approaches and levels of regulation. Japan, for example, has done relatively little to legislate AI, which is notable given that it was a pioneer in AI development. Its primary initiative is the “Social Principles of Human-Centric AI”, which emphasizes ethical considerations but lacks concrete regulatory measures to govern AI applications.

The United States has been more proactive in addressing AI through policy and proposed legislation. The White House’s “Blueprint for an AI Bill of Rights” focuses on safeguarding individual rights in the context of AI technologies. In addition, the “Algorithmic Accountability Act” and the “Data Protection & Privacy Act” have been proposed to address challenges related to accountability, transparency, and data privacy in AI applications. More recently, President Joe Biden signed an executive order that sets new standards for AI safety, security, and privacy.

China has taken a different path from the United States. Its legislative efforts focus on ensuring that AI models, such as its domestic ChatGPT-style chatbots, do not share sensitive information with their users, particularly concerning the Uyghurs.

Brazil is still in the early stages of regulating AI, and its approach may end up resembling a “copy and paste” of the EU’s regulatory framework. This could mean adopting similar principles, guidelines, and policies to ensure the responsible and ethical use of AI in Brazil.

Currently, the EU’s AI-Act is expected to be the most far-reaching legislative effort. As a result, a company that complies with the AI-Act is likely to be well placed to meet most other AI regulations around the world, although it should not assume that compliance elsewhere follows automatically.

What about the ongoing discussions?

There is still some discussion about how exactly to include ‘foundation models’, such as the models behind ChatGPT, in the AI-Act. In some instances, the AI-Act requires documentation outlining the exact purpose of an AI system. However, some models, including ChatGPT, have no specific intended purpose; they are designed to perform a wide array of tasks. How legislators will handle this problem has yet to be decided. Further complicating the matter, there is not yet agreement on what exactly constitutes a foundation model. The number of parameters, the computational power used for training, and the data sources are all potential criteria, but no final decision has been reached.

Another debate concerns the position of biometric recognition systems. It hinges on the delicate balance between their potential to enhance security and the significant privacy implications they pose, and it has been ongoing since the first draft of the AI-Act.

On the one hand, biometric recognition systems, such as facial recognition technology, have proven to be powerful tools for law enforcement and security agencies. These systems can help identify and track criminals, locate missing persons, and prevent terrorist attacks. The ability to match faces or other biometric data against databases can lead to the apprehension of dangerous individuals and, in turn, a safer society.

On the other hand, the widespread use of biometric recognition systems raises substantial privacy concerns. These systems have the capacity to collect and process sensitive personal data without individuals’ knowledge or consent. The constant surveillance and potential for misuse raise questions about the erosion of privacy and civil liberties. Privacy advocates argue that unrestricted use of biometric recognition can lead to mass surveillance, chilling effects on free expression, and the abuse of power by governments and corporations.

Key Dates

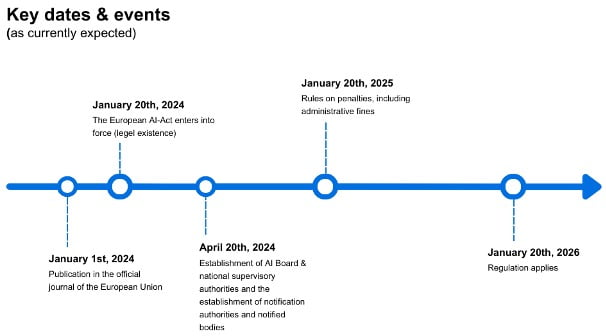

What further intensifies these debates is the impending deadline of January 1st, 2024, which adds a sense of urgency to the discussions. January 1st deadline is a self-imposed deadline. This date marks the publication of the Official Journal of the European Union, which occurs only twice a year. All new regulations are published in this Journal. Missing this deadline would result in a significant half-year delay in the enactment of this legislation, something the legislator will try to avoid. Other key-dates are described in Article 85 of the AI-Act. Provided the deadline of January 1st is met, the timeline would look as follows:

Final Remarks

This blog offered a glimpse into the global legislative context in which the AI-Act is being developed and into the ongoing discussions surrounding it. Clever Republic is committed to staying up to date with the latest developments in this regulatory landscape. We will continue to monitor the evolving discussions closely and provide you with timely updates!

Want to know more about the AI-Act, or do you need help governing your AI models? Don’t hesitate to reach out via email or the contact form on our website!