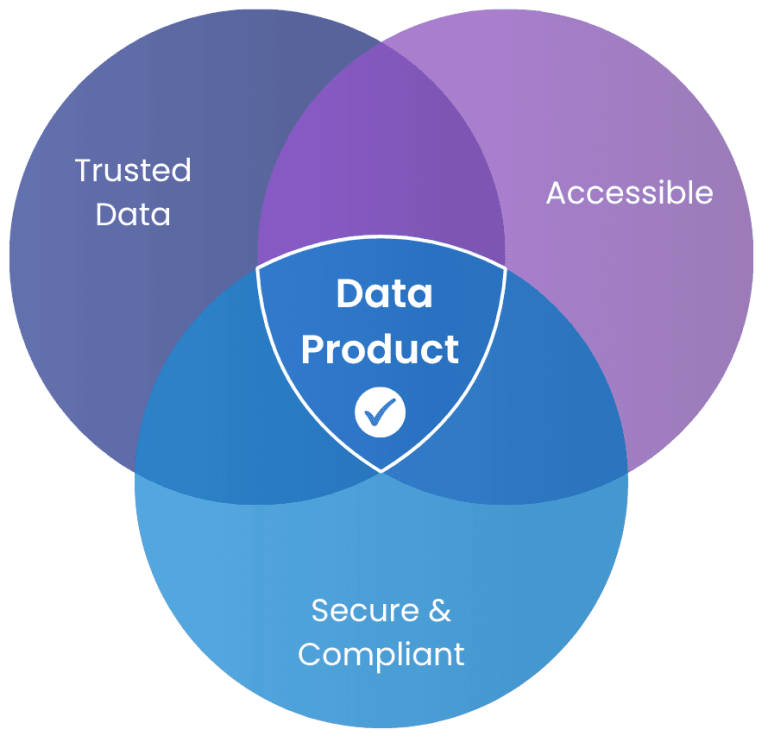

Most organisations still treat Data Quality as a checklist. Something to sort out after the data lands in a warehouse. But that approach no longer fits. Today, data plays a strategic role as a Data Product. It is built with purpose, owned with intent, and expected to deliver trust.

At Clever Republic, we define Data Quality rules by starting at the end. We use backwards thinking. Instead of beginning with data pipelines or technical structures, we begin with what the business needs. What outcome should the Data Product achieve? What can go wrong if the quality is poor? Those questions shape the rules that matter.

Begin With the Outcome

Traditional Data Quality methods focus on source data. Our approach does the opposite. We start from the business decision that depends on the Data Product. Then we ask what the product must guarantee, and which quality rules protect that outcome.
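
One way to make this backwards chain explicit is to record, for every rule, the risk it guards against. The sketch below is illustrative only: the `DataProduct` and `QualityRule` classes are hypothetical names, not part of any tool mentioned in this article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class QualityRule:
    name: str      # human-readable rule name
    protects: str  # the business risk this rule guards against


@dataclass
class DataProduct:
    name: str
    promise: str  # the business outcome the product must deliver
    rules: List[QualityRule] = field(default_factory=list)

    def add_rule(self, name: str, protects: str) -> None:
        """Register a rule only when it states the risk it guards against."""
        if not protects.strip():
            raise ValueError(f"rule {name!r} has no stated purpose")
        self.rules.append(QualityRule(name, protects))


# Hypothetical usage, borrowing wording from the Pension Payout example.
pension = DataProduct(
    name="Pension Payout",
    promise="Each employee receives the correct amount, on time, every month",
)
pension.add_rule(
    "Employer match must not exceed 5% of annual salary",
    protects="Employer over-contribution causing regulatory issues",
)
```

A rule without a stated purpose is rejected, which keeps the rule list tied to outcomes rather than to columns.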

Let us look at three example Data Products built for our fictional online supermarket company, Groove. Each supports a different business goal. Each requires a different approach to Data Quality. But all benefit from the same backwards logic.

Data Product: Pension Payout

This Data Product calculates monthly pension payments for Groove employees. It transforms payroll and HR data into trusted financial outcomes. The business promise is clear: each employee must receive the correct amount, on time, every month.

We start by identifying what could break that promise. An ineligible employee might receive funds. An employer could over-contribute, causing regulatory issues. Or an investment might be undervalued, leading to accounting errors.

That is why we work backwards from these outcomes and define targeted rules using SODA, a Data Quality monitoring tool designed for operational pipelines.

To protect eligibility logic, we check that each employee's age falls within the age range of their assigned cohort:

    - failed rows:
        name: "Employee age should match the assigned cohort age range"
        fail condition: (YEAR(CURRENT_DATE()) - YEAR(Date_Of_Birth)) NOT BETWEEN MIN_AGE AND MAX_AGE
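
The fail condition above approximates age as a difference of calendar years, so it can overstate an age by one until the birthday has passed in the current year. Where exact age matters for eligibility, the comparison could be based on completed years instead. The following is a minimal sketch of that idea; the function names and the sample dates are illustrative assumptions, not part of the original rule.

```python
from datetime import date


def age_in_years(date_of_birth: date, today: date) -> int:
    """Completed years of age on `today`."""
    had_birthday = (today.month, today.day) >= (date_of_birth.month, date_of_birth.day)
    return today.year - date_of_birth.year - (0 if had_birthday else 1)


def fails_cohort_check(date_of_birth: date, min_age: int, max_age: int, today: date) -> bool:
    """True when the employee's exact age lies outside the cohort's age range."""
    age = age_in_years(date_of_birth, today)
    return not (min_age <= age <= max_age)


# Born on 31 December 1960: the year difference in mid-2025 is 65,
# but the employee has only completed 64 years.
today = date(2025, 6, 1)
print(age_in_years(date(1960, 12, 31), today))                # 64
print(fails_cohort_check(date(1960, 12, 31), 65, 70, today))  # True
```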

To ensure financial limits are respected, we cap employer contributions:

    - failed rows:
        name: "Employer match must not exceed 5% of annual salary"
        fail condition: Employer_Match > 0.05 * (SELECT Salary FROM Employees WHERE Employees.Employee_ID = Investment_Contribution.Employee_ID)
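
To see which rows a condition like this would surface, it can be tried against a small in-memory database. The sketch below uses Python's built-in `sqlite3` module and wraps the fail condition in a plain `SELECT ... WHERE`, which only roughly mimics how a failed-rows check behaves. The table and column names come from the rule above; the column types and sample values are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical sample data: employee 1 gets a 4% match, employee 2 a 6% match.
conn.executescript("""
    CREATE TABLE Employees (Employee_ID INTEGER PRIMARY KEY, Salary REAL);
    CREATE TABLE Investment_Contribution (Employee_ID INTEGER, Employer_Match REAL);
    INSERT INTO Employees VALUES (1, 50000), (2, 40000);
    INSERT INTO Investment_Contribution VALUES (1, 2000), (2, 2400);
""")

# Same condition as the rule: rows returned here are the rows that fail.
failed_rows = conn.execute("""
    SELECT Employee_ID, Employer_Match
    FROM Investment_Contribution
    WHERE Employer_Match > 0.05 * (
        SELECT Salary
        FROM Employees
        WHERE Employees.Employee_ID = Investment_Contribution.Employee_ID
    )
""").fetchall()

print(failed_rows)  # [(2, 2400.0)]
```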

To avoid misstatements in investment value, we check:

    - failed rows:
        name: "Current value of investment must not be lower than the invested amount"
        fail condition: Current_Value < Invest_amount

These rules do not start from column-level checks. They start from the value this product must deliver, and the trust it must uphold.

Data Product: Greenhouse Gas Impact

This product helps Groove report emissions across Scope 1, 2, and 3. It supports regulatory compliance under the EU Corporate Sustainability Reporting Directive (CSRD) and shapes sustainability strategy. Errors in this product can lead to legal risk and reputational damage.

To define Data Quality rules here, we start with what must be reported. All emission sources need to be tracked. Factors must align with approved standards. Reporting must happen every quarter, without gaps.

To protect against sudden, unexplained drops in reported emissions, which might indicate missing data or incorrect calculations, we flag any year whose total falls more than 20% below the previous year's:

    WITH emissions_with_lag AS (
        SELECT
            year,
            Total_TCO2e,
            LAG(Total_TCO2e) OVER (ORDER BY year) AS prev_year_TCO2e
        FROM @gold.ghg_emissions_total_GreenhouseGasImpact
    )
    SELECT *
    FROM emissions_with_lag
    WHERE Total_TCO2e < 0.8 * prev_year_TCO2e
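
The same year-over-year comparison can be sketched in plain Python, which is handy for checking the threshold against known figures before deploying the rule. The function name `sudden_drops` and the emission totals are made up for illustration. Like `LAG` in the query above, the sketch compares each year with the previous year present in the data, which is not necessarily the previous calendar year.

```python
def sudden_drops(totals_by_year, threshold=0.8):
    """Return (year, total, previous_total) for each year whose total is
    below `threshold` times the total of the preceding year in the data."""
    flagged = []
    years = sorted(totals_by_year)
    for prev_year, year in zip(years, years[1:]):
        prev_total = totals_by_year[prev_year]
        total = totals_by_year[year]
        if total < threshold * prev_total:
            flagged.append((year, total, prev_total))
    return flagged


# Hypothetical totals in tCO2e: only 2023 drops by more than 20%.
emissions = {2021: 1000.0, 2022: 950.0, 2023: 700.0, 2024: 690.0}
print(sudden_drops(emissions))  # [(2023, 700.0, 950.0)]
```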

And we prevent incomplete or invalid emissions values from entering reports:

    SELECT *
    FROM @gold.ghg_emissions_total_GreenhouseGasImpact
    WHERE Total_TCO2e IS NULL OR Total_TCO2e < 0

These rules ensure traceability, consistency, and audit readiness. They do not exist to serve the data team. They exist to serve legal teams, compliance officers, and sustainability managers who depend on accurate reporting.

Data Product: Customer Churn

Groove’s churn model does more than crunch numbers. It forecasts which customers are about to leave and helps marketing intervene with tailored campaigns. But if the underlying data is off, even slightly, the model misses the mark. Predictions fail, budgets are wasted, and trust evaporates.

That is why we do not start with features or model accuracy. We begin by asking: what must go right for this prediction to be useful? From there, we define what data must be protected, measured, and monitored.

Using Collibra Data Quality, we map those expectations to targeted checks. Take tenure, for example. A negative value here would suggest a customer has been with the platform for negative time. It sounds absurd, but it happens, and it breaks downstream logic. The check below also flags a tenure of exactly zero:

    SELECT *
    FROM @customer_churn
    WHERE TENURE <= 0

Another red flag is around categorical fields. Marketing relies on segmentation by device type, payment preferences, and shopping behaviour. So we lock down those fields to known values. For instance:

    SELECT *
    FROM @customer_churn
    WHERE PREFERRED_PAYMENT_METHOD NOT IN ('Cash on Delivery', 'Credit Card', 'PayPal', 'Debit Card', 'Bank Transfer')

We also check that satisfaction scores are realistic. This is a key feature in the model and a strong signal for churn. If the values fall outside expected bounds, the predictions quickly become unreliable.

    SELECT *
    FROM @customer_churn
    WHERE SATISFACTION_SCORE < 1.00 OR SATISFACTION_SCORE > 10.00
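
The three churn checks can also be applied to a single record at a time, for example in a unit test for the feature pipeline. The sketch below mirrors the conditions from the queries above. The function name, the failure messages, and the sample records are illustrative assumptions, and the sketch assumes none of the three fields is missing.

```python
VALID_PAYMENT_METHODS = {
    "Cash on Delivery", "Credit Card", "PayPal", "Debit Card", "Bank Transfer",
}


def failed_checks(row):
    """Return the names of the churn checks that this record fails."""
    failures = []
    if row["TENURE"] <= 0:
        failures.append("tenure must be positive")
    if row["PREFERRED_PAYMENT_METHOD"] not in VALID_PAYMENT_METHODS:
        failures.append("payment method must be a known value")
    if row["SATISFACTION_SCORE"] < 1.00 or row["SATISFACTION_SCORE"] > 10.00:
        failures.append("satisfaction score must be between 1 and 10")
    return failures


# Hypothetical records: the first passes every check, the second fails all three.
good = {"TENURE": 12, "PREFERRED_PAYMENT_METHOD": "PayPal", "SATISFACTION_SCORE": 7.5}
bad = {"TENURE": -3, "PREFERRED_PAYMENT_METHOD": "Cheque", "SATISFACTION_SCORE": 11.0}

print(failed_checks(good))       # []
print(len(failed_checks(bad)))   # 3
```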

These checks ensure the Customer Churn Predictor delivers more than just probabilities. They protect the quality of its decisions. Every validation supports a business-critical goal.

Rules With Purpose

When you define Data Quality rules from the Data Product backwards, you create rules that protect outcomes. These rules are not generic. They are specific to what the business needs from each product.

A Data Product is a promise. Data Quality rules protect that promise. They prevent small data issues from turning into large business risks. They give teams confidence to act based on data.

This approach ensures every rule has a purpose. It creates visible value. It gets buy-in from business stakeholders because they see how quality supports their goals.

A Better Way to Define Quality

Too often, organisations apply the same set of rules to every dataset. The result is a bloated list of checks with no clear benefit. Teams lose motivation. The business sees no impact.

Backwards thinking changes that. By focusing on the product, the purpose, and the people who rely on the data, we define smarter rules. Each one is traceable to an outcome. Each one improves trust.

At Clever Republic, we embed this mindset into every project. We connect Data Quality with Data Governance and Data Intelligence to create products that deliver value from day one.

Time to Rethink Your Rules?

If you are still defining Data Quality by starting from the source data, now is the time to flip the approach. Start with the outcome. Identify what must go right. Then define the rules that ensure it does.

Want help applying this approach to your Data Products? We are ready to support you.

At Clever Republic, we bring strategy, governance, and technology together to make your Data Products trusted and future-proof.